The Symbiosis of

Deep Learning and Differential Equations

DLDE III

NeurIPS 2023 Workshop

Introduction

In the deep learning community, a remarkable trend is emerging, where powerful architectures are created by leveraging classical mathematical modeling tools from diverse fields like differential equations, signal processing, and dynamical systems. Differential equations are a prime example: research on neural differential equations has expanded to include a large zoo of related models with applications ranging from time series analysis to robotics control. Score-based diffusion models are among the state-of-the-art tools for generative modelling, and they draw direct connections between diffusion models and neural differential equations. Other examples of deep architectures with important ties to classical fields of mathematical modelling include normalizing flows, graph neural diffusion models, Fourier neural operators, architectures exhibiting domain-specific equivariances, and latent dynamical models (e.g., latent NDEs, H3, S4, Hyena).

The previous two editions of the Workshop on the Symbiosis of Deep Learning and Differential Equations have promoted the bidirectional exchange of ideas at the intersection of classical mathematical modelling and modern deep learning. On the one hand, this includes the use of differential equations and similar tools to create neural architectures, accelerate deep learning optimization problems, or study theoretical problems in deep learning. On the other hand, the Workshop also explores the use of deep learning methods to improve the speed, flexibility, or realism of computer simulations. Responding to demand from last year's attendees, this year's edition will place a special focus on neural architectures that leverage classical mathematical models, such as those listed above.

Important Dates

| Milestone | Date |
| --- | --- |
| Submission Deadline | October 8th, 2023 - Anywhere on Earth (AoE) |
| Final Decisions | October 24th, 2023 - AoE |
| Workshop Date | December 16th, 2023 |

Call for Extended Abstracts

We invite high-quality extended abstract submissions on the intersection of DEs and DL, including but not limited to works that connect to this year's focus area of neural architectures that leverage classical mathematical models (see above). Some examples (non-exhaustive list):

- Using differential equation models to understand and improve deep learning algorithms:
  - Incorporating DEs into existing DL models (neural differential equations, diffusion models, ...)
  - Analysis of numerical methods for implementing DEs in DL models (trade-offs, benchmarks, ...)
  - Modeling training dynamics using DEs to generate theoretical insights and novel algorithms
- Using deep learning algorithms to create or solve differential equation models:
  - DL methods for solving high-dimensional, highly parameterized, or otherwise challenging DE models
  - Learning-augmented numerical methods for DEs (hypersolvers, hybrid solvers, ...)
  - Specialized DL architectures for solving DEs (neural operators, PINNs, ...)

Submission:

Accepted submissions will be presented during joint poster sessions and will be made publicly available as non-archival reports, allowing future submissions to archival conferences or journals.

Exceptional submissions will be selected for spotlight talks.

Submissions should be up to 4 pages excluding references, acknowledgements, and supplementary material, and should be anonymous and in the DLDE-NeurIPS format. Long appendices are permitted but strongly discouraged, and reviewers are not required to read them. The review process is double-blind.

We also welcome submissions of recently published work that is strongly within the scope of the workshop (with proper formatting). We encourage the authors of such submissions to focus on accessibility to the wider NeurIPS community while distilling their work into an extended abstract. Submissions of this type will be eligible for poster sessions after a lighter review process.

Authors may be asked to review other workshop submissions.

If you have any questions, send an email to [luca.celotti@usherbrooke.ca].

Schedule

(Central Standard Time)

- 08:15 : Introduction and opening remarks

- 08:45 : Philip M. Kim (Keynote Talk) - Machine learning methods for protein, peptide and antibody design.

- 09:30 : Effective Latent Differential Equation Models via Attention and Multiple Shooting (Spotlight)

- 09:45 : Adaptive Resolution Residual Networks (Spotlight)

- 10:00 : Break

- 10:15 : Poster Session 1

- 11:00 : Yulia Rubanova (Keynote Talk) - Learning efficient and scalable simulation using graph networks.

- 11:45 : Can Physics informed Neural Operators Self Improve? (Spotlight)

- 12:00 : Lunch Break

- 13:00 : Michael Bronstein (Keynote Talk) - Physics-inspired learning on graphs.

- 13:45 : Vertical AI-driven Scientific Discovery (Spotlight)

- 14:00 : ELeGANt: An Euler-Lagrange Analysis of Wasserstein Generative Adversarial Networks (Spotlight)

- 14:15 : Albert Gu (Keynote Talk) - Structured State Space Models for Deep Sequence Modeling.

- 15:00 : TANGO: Time-reversal Latent GraphODE for Multi-Agent Dynamical Systems (Spotlight)

- 15:15 : Break

- 15:30 : Poster Session 2

- 16:30 : Closing remarks

Invited Speakers

Philip M. Kim (confirmed) is a Professor at the University of Toronto affiliated with the Departments of Molecular Genetics and Computer Science, the Faculty of Medicine, the Terrence Donnelly Centre for Cellular and Biomolecular Research, and the Collaborative Program for Genome Biology and Bioinformatics. His research lies at the intersection of modern computational and experimental approaches in biomedical science, and aims to make applied contributions to patient care and medical therapies while also contributing to fundamental bioscience research. In particular, he has been prominent in developing the use of cutting-edge machine learning tools to design and validate novel therapeutic compounds and develop new innovative techniques for systems biology and translational science. [Webpage]

Yulia Rubanova (confirmed) is a Research Scientist at DeepMind. She is interested in structured representations of data and in incorporating inductive biases into deep learning models. She completed her PhD at the University of Toronto, supervised by Quaid Morris. She worked on neural ODEs for irregularly-spaced time series (advised by David Duvenaud) and on modelling cancer evolution through time. During her PhD, she did three internships at Google Brain, working on optimization of discrete objects in 2019-2020 and on DeepVariant in 2018. [Webpage]

Michael Bronstein (confirmed) is the DeepMind Professor of AI at the University of Oxford and Head of Graph Learning Research at Twitter. He was previously a professor at Imperial College London and held visiting appointments at Stanford, MIT, and Harvard, and has also been affiliated with three Institutes for Advanced Study (at TUM as a Rudolf Diesel Fellow (2017-2019), at Harvard as a Radcliffe fellow (2017-2018), and at Princeton as a short-time scholar (2020)). Michael received his PhD from the Technion in 2007. He is the recipient of the Royal Society Wolfson Research Merit Award, Royal Academy of Engineering Silver Medal, five ERC grants, two Google Faculty Research Awards, and two Amazon AWS ML Research Awards. He is a Member of the Academia Europaea, Fellow of IEEE, IAPR, BCS, and ELLIS, ACM Distinguished Speaker, and World Economic Forum Young Scientist. In addition to his academic career, Michael is a serial entrepreneur and founder of multiple startup companies, including Novafora, Invision (acquired by Intel in 2012), Videocites, and Fabula AI (acquired by Twitter in 2019). Recently, he's been working on graph neural diffusion. [Webpage]

Albert Gu (confirmed) is an Assistant Professor in the Machine Learning Department at Carnegie Mellon and Chief Scientist at Cartesia. He is interested in structured representations for machine learning, including structured linear algebra and embeddings, analysis and design of sequence models with a focus on long context, and non-Euclidean representation learning. He completed his PhD at Stanford, supervised by Christopher Ré. He is best known for his groundbreaking work on structured state space models (SSMs). [Webpage]

Organizers

Stanford University

Borealis AI

University of Cambridge

Université de Sherbrooke

Acknowledgments

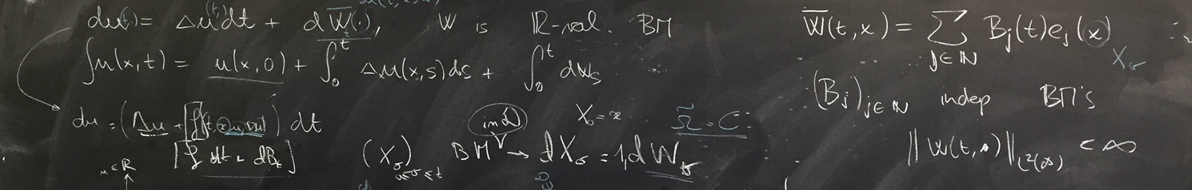

Thanks to visualdialog.org for the webpage format. Thanks to whatsonmyblackboard for the cozy blackboard photo.